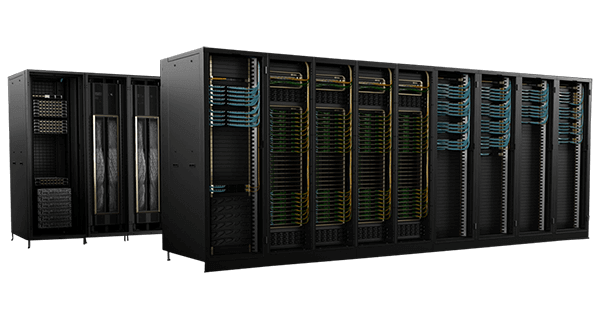

NVIDIA DGX SuperPOD™ with DGX GB200 systems is purpose-built for training and running inference on trillion-parameter generative AI models. Each liquid-cooled rack features 36 NVIDIA GB200 Grace Blackwell Superchips (36 NVIDIA Grace CPUs and 72 Blackwell GPUs) connected as one with NVIDIA NVLink. Multiple racks connect with NVIDIA Quantum InfiniBand to scale up to tens of thousands of GB200 Superchips.
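The rack figures above reduce to simple arithmetic: 36 superchips yielding 36 Grace CPUs and 72 Blackwell GPUs implies one CPU and two GPUs per superchip. The Python sketch below is a minimal illustration that assumes this ratio holds for every rack. The function name `rack_totals` and the rack count in the usage line are hypothetical and chosen only for illustration.

```python
# Per-rack figures taken from the description above.
SUPERCHIPS_PER_RACK = 36
CPUS_PER_SUPERCHIP = 1   # 36 Grace CPUs / 36 superchips
GPUS_PER_SUPERCHIP = 2   # 72 Blackwell GPUs / 36 superchips


def rack_totals(racks: int) -> dict:
    """Return superchip, CPU, and GPU counts for a given number of racks.

    Hypothetical helper: assumes every rack is fully populated.
    """
    if racks < 0:
        raise ValueError("racks must be non-negative")
    superchips = racks * SUPERCHIPS_PER_RACK
    return {
        "superchips": superchips,
        "grace_cpus": superchips * CPUS_PER_SUPERCHIP,
        "blackwell_gpus": superchips * GPUS_PER_SUPERCHIP,
    }


if __name__ == "__main__":
    # Example: an illustrative 8-rack deployment (not a figure from the text).
    print(rack_totals(8))
```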

NVIDIA® DGX SuperPOD™ with DGX™ GB200 Systems

The Best of NVIDIA AI in One Place

Giant Memory for Giant Models

Unlike existing AI supercomputers that are designed to support workloads that fit within the memory of a single system, NVIDIA DGX GH200 is the only AI supercomputer that offers a shared memory space of 19.5TB across 32 Grace Hopper Superchips, providing developers with over 30X more fast-access memory to build massive models. DGX GH200 is the first supercomputer to pair Grace Hopper Superchips with the NVIDIA NVLink Switch System, which allows 32 GPUs to be united as one data-center-size GPU. Multiple DGX GH200 systems can be connected using NVIDIA InfiniBand to provide even more computing power. This architecture provides 10X more bandwidth than the previous generation, delivering the power of a massive AI supercomputer with the simplicity of programming a single GPU.
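The memory figures quoted above can be unpacked with a short calculation. The Python sketch below is a rough illustration that uses only the numbers in the paragraph (19.5TB, 32 superchips, "over 30X"). The function names are hypothetical. Because the text does not name the comparison system behind "30X", the second value is only an upper bound on its memory.

```python
# Figures quoted in the paragraph above.
SHARED_MEMORY_TB = 19.5
SUPERCHIPS = 32
MEMORY_MULTIPLE = 30  # "over 30X more fast-access memory"


def per_superchip_tb(total_tb: float = SHARED_MEMORY_TB,
                     superchips: int = SUPERCHIPS) -> float:
    """Average share of the pooled memory contributed by each superchip."""
    return total_tb / superchips


def implied_baseline_tb(total_tb: float = SHARED_MEMORY_TB,
                        multiple: float = MEMORY_MULTIPLE) -> float:
    """Upper bound on the comparison system's memory implied by 'over 30X'."""
    return total_tb / multiple


if __name__ == "__main__":
    print(f"Per superchip: {per_superchip_tb():.3f} TB")
    print(f"Implied baseline: under {implied_baseline_tb():.2f} TB")
```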

Super Power-Efficient Computing

As AI models have grown more complex, the technology needed to develop and deploy them has become more resource intensive. The NVIDIA Grace Hopper Superchip architecture lets DGX GH200 achieve excellent power efficiency despite this. Each NVIDIA Grace Hopper Superchip combines a CPU and a GPU in one unit, connected with superfast NVIDIA NVLink-C2C. The Grace™ CPU uses LPDDR5X memory, which consumes one-eighth the power of traditional DDR5 system memory while providing 50% more bandwidth than eight-channel DDR5. Because the Grace CPU and Hopper™ GPU sit on the same module, their interconnect consumes 5X less power and provides 7X the bandwidth of the latest PCIe technology used in other systems.

ACCESS OUR AI LAB

Experience our HPC and AI solutions.

Modular solutions for multiple users and diverse workloads. Reference architecture for quick deployment and quick updates. Easy-to-manage configurations that allow workload orchestration.